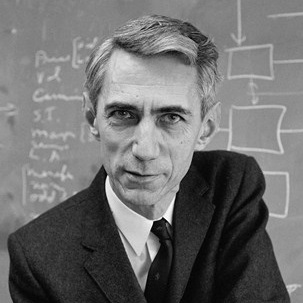

I’ve always been a follower of Claude Shannon and the incredible work he did on Communication Theory (i.e. signal/noise) while at Bell Labs. He knew enough to refrain from over-explanations, and in doing so he also invented the broader discipline of Information Theory. He brought the term ‘bit’ into print (crediting his colleague John Tukey with coining it), and was just as influential to computers and information networks as Alan Turing. He also built the first juggling robot.

I just finished reading a quite comprehensive history of this subject — The Information by James Gleick. From Amazon’s description: “We live in the information age. But every era of history has had its own information revolution: the invention of writing, the composition of dictionaries, the creation of the charts that made navigation possible, the discovery of the electronic signal, the cracking of the genetic code. In ‘The Information’ James Gleick tells the story of how human beings use, transmit and keep what they know. From African talking drums to Wikipedia, from Morse code to the ‘bit’, it is a fascinating account of the modern age’s defining idea and a brilliant exploration of how information has revolutionised our lives.”

There’s also a great (and greatly simplified) video essay below about his work by the fantastic Adam Westbrook.

April 30th 2016 marks the centenary of his birth, and there are a number of celebrations marking this event. Many of his seminal papers (including the crucial A Mathematical Theory of Communication) are available here.

Admittedly, his work sounds a little dry. However, along with that of John von Neumann and George Boole, it ushered in the digital revolution as we know it today, and it will continue to influence how we think about computers well into the future.

via amazon.co.uk and delve.tv
